Apple Music hits hard.

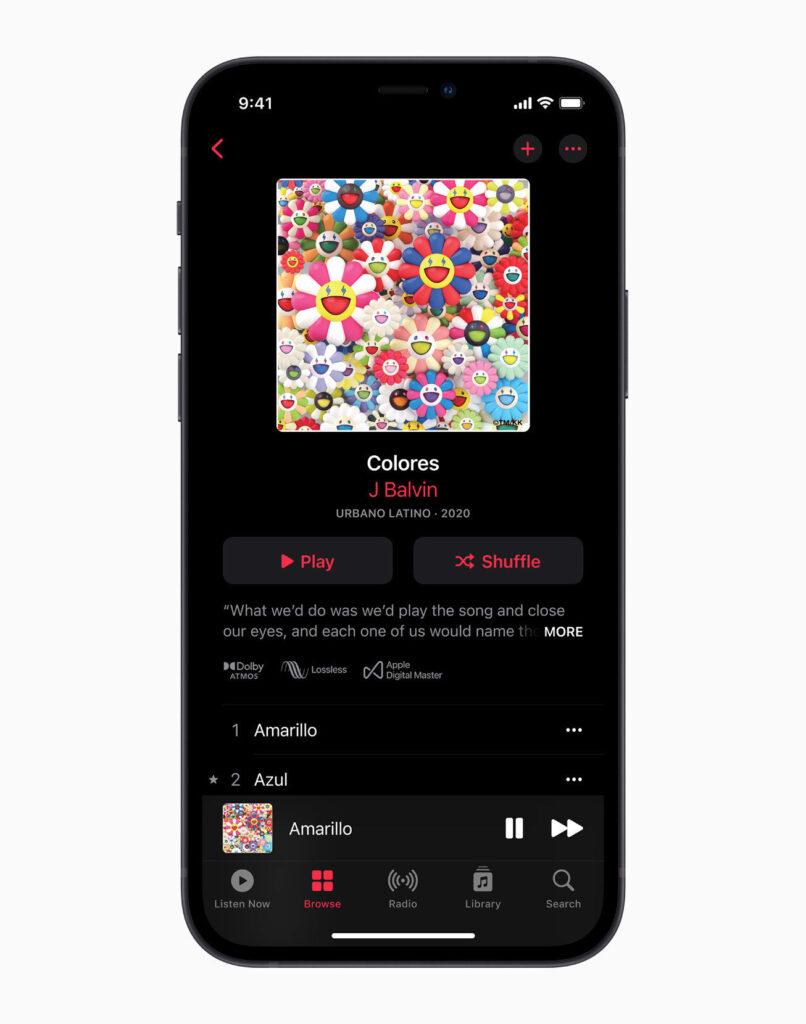

Not long ago, Apple announced that it would offer a “lossless” sound quality option for the entire Apple Music library and launch a “spatial audio” feature based on the Dolby Atmos standard. All lossless and spatial audio content will be included in the standard Apple Music subscription at no extra charge.

For the entire streaming music industry, this amounts to an overwhelming blow. Tidal, another major Hi-Fi music service, also offers “high-resolution lossless” music, but it charges twice the standard subscription fee, $20 a month, for “lossless sound quality.” Spotify, the world’s largest music service by users, has no lossless option at all.

The news that Apple Music would support lossless sparked heated public discussion. People soon discovered that Apple’s own headphones and speakers do not support “lossless” playback, including the AirPods Max, which launched only last year with a focus on “high-fidelity sound quality.” The discussion gradually grew into a controversy. On the 24th, Apple quickly published a Q&A page answering questions about “lossless sound quality” in detail. It explained why Bluetooth headphones cannot support lossless and promised that HomePod speakers would gain lossless support in a later software update.

Ever since the iPod era, Apple has been lukewarm about “lossless.” Its product philosophy emphasizes thin, comfortable, unencumbered devices, the exact opposite of the audiophile creed that “you can give up everything in pursuit of sound quality.” In recent years, Apple has pushed hard on wireless products and has sold more than 200 million AirPods, which were clearly not designed for “listening losslessly.”

Is introducing “lossless” sound quality at this point a contradiction for Apple? And what does it mean?

Anti-lossless Apple

Apple made its fortune in the music business, but it has never been in the Hi-Fi business.

When Jobs unveiled the iPod 20 years ago, his core focus was “efficiency.” He compared it with CD players, flash-based MP3 players, and other devices, and stressed that the iPod’s key advantage was its built-in 5 GB hard drive, which could store 1,000 songs. Those 1,000 songs were certainly not CD-quality lossless music.

Jobs’ goal for the iPod was simple: let users carry their entire music library with them. With that aim, the iPod kept getting thinner and lighter while its capacity kept growing. In the following years, Apple released the iPod shuffle, the lightest of all at only 11 g, as well as the iPod classic, whose 160 GB capacity could hold 40,000 songs.

From this perspective, it is easy to understand why Apple has stayed out of lossless Hi-Fi. Apple products need to be light, portable, efficient, and easy to use, whereas most Hi-Fi setups require large audio files, complex decoding and amplification circuits, and high-power amplifiers to drive high-impedance headphones. All of that ends up in the product, which inevitably becomes bulky.

Apple’s focus on efficiency and portability does not mean it doesn’t care about “sound quality.” When Apple launched the iTunes Music Store in 2003, it passed over MP3, the industry’s dominant digital audio format, in favor of AAC, a more advanced and more efficient but relatively niche encoding. Because Apple controlled the entire chain of hardware, software, and services, it was free to adopt the more advanced technology.

At first, Apple used a 128 kbps bit rate, at which a 4-minute song was only about 4 MB. Later, as product storage grew, Apple gradually raised the bit rate for its song files to 192 and then 256 kbps.
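The “about 4 MB” figure follows from bit rate × duration. The sketch below reproduces it, assuming a constant bit rate, 1 MB = 1,000,000 bytes, and no container or metadata overhead (real files are slightly larger).

```python
def file_size_mb(bitrate_kbps: float, seconds: float) -> float:
    """Approximate size of a constant-bit-rate audio stream in megabytes (10**6 bytes)."""
    bits = bitrate_kbps * 1000 * seconds  # kbps -> bits per second, times duration
    return bits / 8 / 1_000_000           # bits -> bytes -> MB

# The three iTunes bit rates mentioned above, for a 4-minute song
for rate in (128, 192, 256):
    print(f"{rate} kbps, 4 min: {file_size_mb(rate, 4 * 60):.2f} MB")
```

At 128 kbps this gives 3.84 MB, matching the article; doubling to 256 kbps doubles the size.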

AAC at 256 kbps has been widely accepted by both users and the industry. On paper, 256 kbps AAC can even sound better than 320 kbps MP3. On consumer audio equipment, at least, the human ear can barely tell 320 kbps MP3 from a lossless CD; only a few audiophiles with excellent playback gear can hear the nuances through repeated A/B comparisons.

Since it was settled on in 2007, 256 kbps AAC has been the gold standard of Apple’s music ecosystem, carried over from iTunes to Apple Music for 14 years.

In 2016, Apple removed the headphone jack from the iPhone 7 and launched AirPods, its Bluetooth earbuds. At the launch event, Phil Schiller called the move a “courageous decision,” because Apple believed wireless would deliver a better experience. In the first-generation promotional video, Jony Ive said, “We believe in a wireless future.”

It is harder for Bluetooth headphones to play lossless music. Today, the highest bit rates supported by mainstream Bluetooth codecs mostly range from 328 kbps to 576 kbps. Even LDAC, the high-bit-rate codec launched by Sony, tops out at 990 kbps, far short of the 1,411 kbps required for CD-quality lossless audio. Moreover, the higher a Bluetooth codec’s bit rate, the lower its stability and range and the higher its latency, which compromises the experience.
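The 1,411 kbps figure is the raw PCM rate of CD audio: sample rate × bit depth × channels. The sketch below derives it and compares it with the codec ceilings quoted above; it assumes two-channel stereo and treats those ceilings as fixed, although real Bluetooth throughput varies with the device and link quality.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bit_depth: int, channels: int = 2) -> float:
    """Uncompressed PCM bit rate in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000

cd_kbps = pcm_bitrate_kbps(44_100, 16)  # CD audio: 44.1 kHz, 16-bit, stereo

# Peak bit rates as quoted in the text, in kbps
codec_ceilings_kbps = {
    "mainstream codecs, low end": 328,
    "mainstream codecs, high end": 576,
    "Sony LDAC": 990,
}

for name, rate in codec_ceilings_kbps.items():
    print(f"{name}: {rate} kbps = {rate / cd_kbps:.0%} of CD PCM ({cd_kbps} kbps)")
```

Even the fastest codec in the list carries only about 70% of the uncompressed CD data rate.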

AAC is the core of Apple’s music ecosystem, so Apple also uses AAC for transmission on its own Bluetooth headphones, such as AirPods and Beats. Compared with most Android phones paired with Bluetooth headphones, this approach can skip one round of decoding and re-encoding, making signal transmission and conversion more efficient and delivering high-quality, low-latency audio as far as possible.

Whether it was making products as portable as possible in the iPod era or embracing wireless technology in the iPhone era, Apple has been a company that emphasizes the “music experience” rather than “Hi-Fi specs.” To some extent, you could even call it a pioneer of “anti-lossless.”

Pro-lossless Apple

In its 20 years in the music business, Apple has dabbled in Hi-Fi once or twice.

In 2004, to meet the needs of CD listeners at the time, Apple introduced the Apple Lossless (ALAC) encoding format. Users could losslessly convert tracks ripped from CDs into a format the iPod supported and listen to them on the iPod. In addition, the 30-pin connector used by the iPod and early iPhones supported analog output, so users could also connect an iPod to an amplifier and play lossless files through speakers.

In 2006, Apple also introduced a speaker called the iPod Hi-Fi, priced at $350. But Hi-Fi is a niche demand after all; the speaker’s positioning was neither here nor there, and it did not fit Apple’s product character. Sales were disappointing, and a little over a year later the iPod Hi-Fi was quietly discontinued. It was Apple’s last attempt to enter Hi-Fi.

Although the ALAC lossless format was introduced long ago, Apple never sold “lossless music” in the iTunes Store. An important reason was the reluctance of record companies. Before 2010, physical CD sales were still a good business, and if music sold in the iTunes Store reached CD quality, it could hurt CD sales.

However, as physical record sales have shrunk year after year, streaming has long since become the recording industry’s main source of revenue. For record companies, licensing higher-quality music to Apple is no longer a difficult decision. Meanwhile, iPhone storage keeps growing and 5G brings faster transfer speeds, so the large file sizes of lossless music no longer place much extra burden on users.

The lossless music Apple will offer on Apple Music is based on the ALAC format. Beyond CD-level lossless quality, Apple will also offer “high-resolution lossless” at up to 24-bit/192 kHz. The raw data rate of audio at this specification is theoretically more than six times that of a CD. Apple’s own devices cannot even play it back at full resolution; users need to connect an external decoder (DAC).
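The ratio between the two specifications follows from sample rate × bit depth × channels. This sketch compares uncompressed stereo PCM at CD quality (16-bit/44.1 kHz) and at the top hi-res tier (24-bit/192 kHz); it measures raw data rate only, not audible quality, and ALAC files are compressed below these raw rates.

```python
def pcm_bits_per_second(sample_rate_hz: int, bit_depth: int, channels: int = 2) -> int:
    """Raw PCM data rate in bits per second (stereo by default)."""
    return sample_rate_hz * bit_depth * channels

cd = pcm_bits_per_second(44_100, 16)       # CD quality
hi_res = pcm_bits_per_second(192_000, 24)  # top "high-resolution lossless" tier

ratio = hi_res / cd
print(f"hi-res {hi_res / 1000:.1f} kbps vs CD {cd / 1000:.1f} kbps: {ratio:.2f}x")
```

The result is roughly 6.5×: 9,216 kbps against 1,411.2 kbps.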

For Apple, as long as record companies give permission, offering users lossless content takes little effort. Apple has long required record companies to submit high-specification digital masters, with a recording specification of at least 44.1 kHz and native 24-bit. These masters are stored in Apple Music’s database, and Apple only needs to package them in a lossless format before delivering them to users.

In a very early technical document, Apple explained why it asks record companies for high-quality masters: “As technology advances, bandwidth, storage capacity, battery life, and processor performance will increase. We can use the quality advantage of these masters to enhance the music experience.” Clearly, Apple had long planned to offer higher-quality music.

For Apple, “providing lossless content” is very simple, but integrating lossless content with its own hardware and software is not. Over the past ten years, Apple has made all kinds of hardware and software optimizations for music, all of them built on the lossy AAC standard.

For example, Apple has vigorously promoted 24-bit recording. Technically, a greater bit depth gives the sound a larger total dynamic range, so sounds of different loudness are captured more accurately.
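The link between bit depth and dynamic range can be made concrete with the usual rule of thumb: an N-bit linear PCM signal spans 2^N levels, or 20·log10(2^N) ≈ 6.02·N dB. This is an idealized figure; it ignores dither, noise shaping, the extra 1.76 dB in the sine-wave SNR formula, and the analog noise floor of real equipment.

```python
import math

def dynamic_range_db(bit_depth: int) -> float:
    """Theoretical dynamic range of linear PCM: 20*log10(2**bits), about 6.02 dB per bit."""
    return 20 * math.log10(2 ** bit_depth)

for bits in (16, 24):
    print(f"{bits}-bit: about {dynamic_range_db(bits):.1f} dB")
```

Going from 16-bit to 24-bit raises the theoretical range from roughly 96 dB to roughly 144 dB.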

Apple wants audio with a larger dynamic range for two reasons. First, it gives Apple more headroom when compressing to AAC. Second, it makes it easier for Apple to tune the sound at the hardware level for the characteristics of each product. On AirPods, Beats headphones, and HomePod speakers, Apple applies different “adaptive processing” to the audio signal to optimize frequency response and deliver sound suited to different scenarios.

This partly explains why almost none of Apple’s own headphones and speakers support lossless. Apple’s entire audio experience is built on AAC, from mastering standards, encoding, and packaging to Bluetooth transmission and optimization for each device. The idea of computational audio is carved into the products’ DNA. Take the AirPods Max: even when connected by cable and fed a lossless audio signal, the headphones first convert it into a digital signal, run it through computation and optimization, and only then turn it into sound, so the whole process cannot be lossless.
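Why any processing step breaks “losslessness” can be shown with the simplest possible DSP operation. The sketch below is a hypothetical illustration, not Apple’s actual processing, which is not public: it applies a small gain change (a stand-in for EQ) to random 16-bit samples, re-quantizes to integers, then tries to undo the change. The helper name `apply_gain_16bit`, the −0.5 dB value, and the random test data are all assumptions.

```python
import random

def apply_gain_16bit(samples: list[int], gain_db: float) -> list[int]:
    """Hypothetical helper: scale 16-bit PCM samples by gain_db, round, and clip to 16-bit range."""
    factor = 10 ** (gain_db / 20)
    return [max(-32768, min(32767, round(s * factor))) for s in samples]

random.seed(0)
# Stand-in for one block of decoded lossless audio (random data, not real music)
source = [random.randint(-20000, 20000) for _ in range(1000)]

# A hypothetical -0.5 dB "adaptive" adjustment, followed by an attempt to undo it
processed = apply_gain_16bit(source, -0.5)
restored = apply_gain_16bit(processed, +0.5)

changed = sum(a != b for a, b in zip(source, processed))
not_recovered = sum(a != b for a, b in zip(source, restored))
print(f"{changed} of {len(source)} samples altered by processing")
print(f"{not_recovered} samples still differ after undoing the gain")
```

Nearly every sample changes, and because rounding discards information, some samples cannot be recovered even when the gain is reversed: the output is no longer bit-identical to the lossless source.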

Delivering a better music experience takes more than “packaging the master into a lossless format and sending it to users.” The key question is how to upgrade the listening experience on the basis of higher recording, production, and playback standards.

The “Spatial Audio” feature launched alongside lossless points in one direction: Apple is working with Dolby to upgrade traditional two-channel stereo songs to 5.1 channels.

Apple Music grows up

A move like “Apple Music supports lossless” is something the Apple of the past might not have made.

No company in the world emphasizes the “integration of hardware, software, and services” more than Apple. To meet a need, Apple usually builds a complete solution from start to finish. When it built the iPod, it sold music in the iTunes Store in AAC, the encoding standard it had chosen for its own ecosystem. Although Apple Music has supported Android and Windows since launch, everyone knows the best experience comes on a Mac or iPhone paired with AirPods and HomePod.

Now, Apple’s strategy seems to be changing. Apple Music’s “lossless sound quality” needs wired headphones and powered speakers to achieve the intended effect, and the top 192 kHz tier even requires an external DAC. That is audiophile gear most ordinary users will never touch, and Apple does not make this kind of equipment.

In 2018, Tim Cook said in an interview that music is not about “bits and bytes” but about humanity and the beauty of art. At the time, he stressed that Apple Music’s playlists were hand-picked by editors, which also seemed to imply that Apple had no interest in joining the sound-quality arms race.

Three years later, market growth is slowing. User growth at Apple, Amazon, Google, Spotify, and other music services is decelerating, the land-grab era is coming to an end, and Apple has not claimed the biggest piece. As of June 2020, Apple Music had 72 million paid subscribers worldwide. By contrast, Spotify has 345 million monthly active users, 155 million of them paying subscribers. Apple Music has made great progress in markets with high iPhone adoption, such as the United States and Japan, but in Europe its user growth lags far behind Spotify’s.

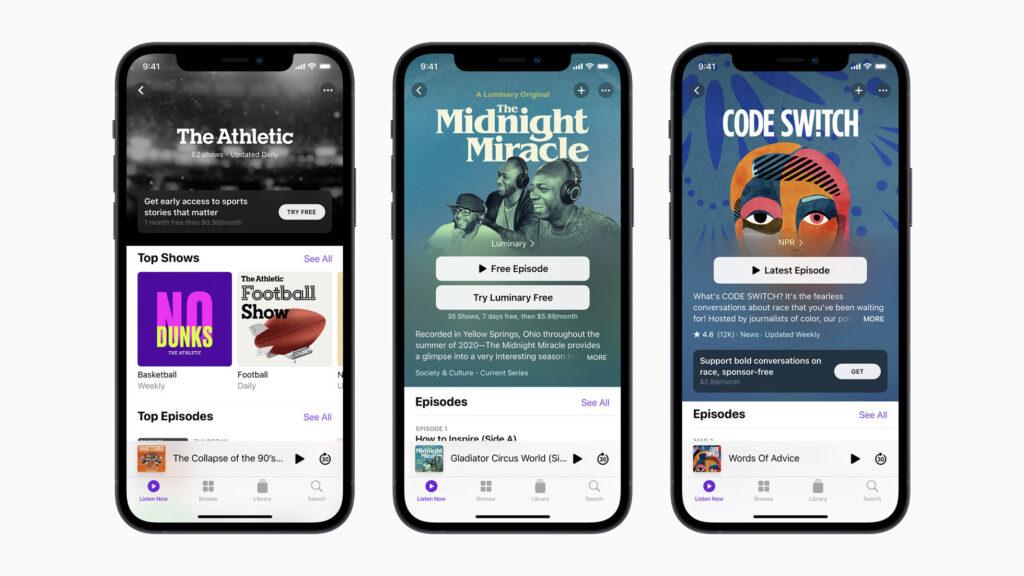

Spotify is also attacking the weak points in the Apple empire’s defenses. Last year, it spent hundreds of millions of dollars building out podcast content, signed exclusive distribution deals with hosts, and tried to offer a “one-stop audio content experience.” Meanwhile, Apple Podcasts’ share of the US market has fallen from 34% in 2018 to 23.8%. At its spring event last month, Apple announced paid podcast subscriptions, widely seen as a response to this shift.

Apple Music is now competing head-on with its rivals more aggressively. By supporting lossless at no extra charge, it can face competitors such as Spotify and Tidal with more confidence: Apple Music can satisfy not only users within the Apple ecosystem but also, as far as possible, the needs of the relatively small and demanding audiophile crowd.

Besides broadening its user base, Apple is also trying to build good relations upstream. In April this year, Apple Music sent a letter to record companies and labels explaining how it shares revenue with artists: each time a user plays a song, Apple pays about one cent in royalties, two to three times what Spotify pays. With more users and more plays, Spotify brings more total revenue to the music industry, but Apple is still doing its best to keep good relations with record companies, or at least not to fall behind its competitors. In the letter, Apple also promised not to sell promotional placement to record companies and not to sell display space in the Apple Music app.

In terms of service strategy, Apple is becoming more open and more “pragmatic.” Beyond lossless audio on Apple Music, the Apple TV app now runs on streaming boxes from Amazon and Google, TVs from Samsung, LG, and Sony, and the PS5 and Xbox. Apple’s services are becoming more independent, no longer just one piece of the Apple ecosystem puzzle.

This change is not a product contradiction. Apple Music is still tightly integrated with Apple’s own hardware and software, even more tightly than before. The upcoming “Spatial Audio” feature depends on Apple’s in-house H1 and W1 chips. Apple still defines demand; it just no longer monopolizes it.

Twenty years ago, when Jobs explained why Apple made the iPod, he said, “Well, we love music.” But Apple users are not the only people who love music; there are many more. Now Apple wants to make friends with them too.